You’re in a budget meeting. A vendor says their program boosts student outcomes by 20%. The slide sparkles, heads nod, and a small voice in your mind asks the only question that matters: twenty percent compared to what? Last year’s cohort? A different school? A handpicked group of super-engaged kids? Or is it just a nice-looking chart doing acrobatics with numbers?

Education leaders live in this moment all the time. Some claims are solid. Some are wishful thinking dressed up as math. If you can’t tell which is which, you end up spending scarce dollars and precious time on gains that won’t repeat. This article will give you a practical way to read educational program impact evaluations: what counts, what doesn’t, and how to choose the level of rigor that fits your decision and timeline.

Why the Method Behind the Number Matters

You have students to serve and calendars that never slow down. You’re triaging: curriculum adoptions, software renewals, tutoring contracts, mentoring, and SEL supports. Every choice ripples into outcomes, budgets, and equity. Boards and funders are asking harder questions. Families are, too. “Show me it works” isn’t a trap; it’s stewardship.

Here’s the rub: independent educational program evaluations are still rarer than they should be. Plenty of “proof” arrives as vendor case studies, opt-in pilots, or usage dashboards. Those are fine operational tools, but they aren’t causal evidence. If you want to know what a program caused, not just what happened alongside it, you need a design that makes a fair comparison possible.

That’s why we built the MomentMN Snapshot Report: a low-burden, fast, independent way to turn the data you already collect into answers you can defend. District leaders get confidence. Nonprofits get credibility with funders and partners. EdTech teams get trust that opens doors and keeps customers. That’s the lane for well-designed impact evaluations of educational services and software.

The One Concept That Changes Everything: Internal Validity

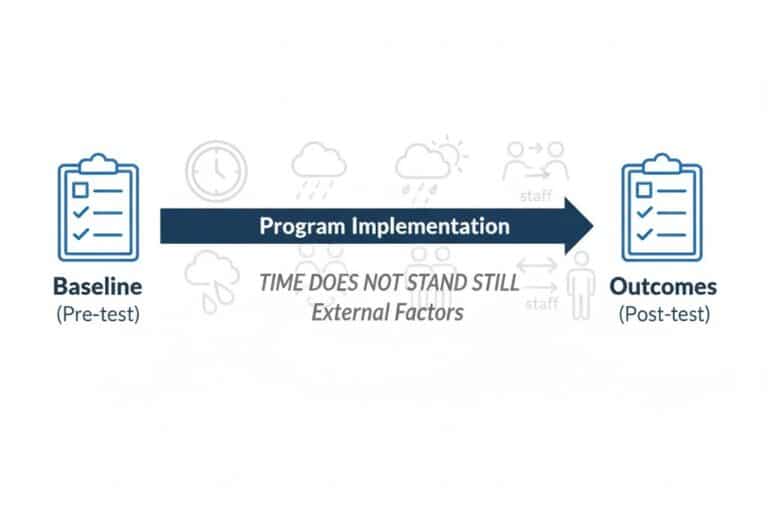

Let’s translate research-speak into plain talk. Internal validity is your confidence that the difference you see was caused by the intervention. Average math scores went up 10 points after students used a program? Internal validity asks, “Was it the program or the new teacher, the Friday tutoring block, a district push on problem-solving, or the simple fact that students had seen similar test items before?”

Picture the classic example: a second-grade intervention (call it “123 Math”) shows a +10-point gain. Looks great. But maybe five points came from regular classroom instruction. Maybe some students took a near-identical assessment twice and remembered items. If your design doesn’t rule out those “something elses,” you can be fooled by your own numbers. It’s like crediting kale for your weight loss when you were also running five miles a day.

Protecting internal validity is the job of good formative educational impact evaluations and other impact evaluations of educational interventions. It doesn’t always mean the most complex, expensive study. It means picking the design that best controls the big confounders for the decision you have to make right now.

The Rigor Ladder, Without the Fog

Think of evidence as a three-rung ladder: low, medium, high. Higher rungs mean stronger confidence that the program caused the change. You can make smart decisions on every rung as long as you know which rung you’re standing on.

Low: Before/After with No Comparison

This is the tempting one. Students take an assessment in September, start the program, and take the same or similar assessment in May. Scores rise. Slide appears. “It works!”

What’s lurking? History effects (students were taught all year), selection (maybe the group came from a school that also added strong teacher PD), and testing (taking similar items twice lifts scores). It looks like evidence because it has numbers, but it lacks a counterfactual: what would have happened without the program. This is where impact evaluations of educational software often go sideways if they stop at pre/post. Use it for coaching and implementation check-ins, not for high-stakes decisions or marketing claims.

Medium: Quasi-Experimental Designs

Add a comparison group (students who didn’t receive the intervention) and measure both groups at the same times. Make the groups as similar as possible on observable factors: prior scores, attendance, demographics. Sometimes you’ll hear “non-equivalent comparison group” because the assignment wasn’t random.

This design shrinks the big threats. History matters less when both groups experience the same year. Selection is reduced by matching and statistical controls. You’ve created a fairer test without turning your district into a laboratory. It’s not perfect; unobserved differences can remain. But this is the practical workhorse where rapid cycle educational impact evaluations often live: credible, fast, feasible.
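To make the comparison-group logic concrete, here is a minimal Python sketch of a difference in gains (a simple difference-in-differences). The scores are hypothetical, chosen to echo the 123 Math example: the program group gains 10 points, and a comparison group that received only regular instruction gains 5. `GroupScores` and `difference_in_gains` are illustrative names, not part of any product or library.

```python
from dataclasses import dataclass


@dataclass
class GroupScores:
    """Average scores for one group, measured at the same two time points."""
    pre: float
    post: float

    def gain(self) -> float:
        return self.post - self.pre


def difference_in_gains(treatment: GroupScores, comparison: GroupScores) -> float:
    # The comparison group's gain stands in for "what would have happened anyway"
    # (regular instruction, retesting, the same school year).
    return treatment.gain() - comparison.gain()


# Hypothetical averages, not real data
treatment = GroupScores(pre=200.0, post=210.0)   # +10 points with the program
comparison = GroupScores(pre=199.0, post=204.0)  # +5 points without it
print(difference_in_gains(treatment, comparison))  # 5.0
```

The estimate is only as fair as the comparison: if the two groups differ in ways that would have changed their growth anyway, the subtraction inherits that bias.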

High: Randomized Controlled Trials (RCTs)

Eligible students or classrooms are randomly assigned to treatment or control. Both groups are measured the same way over the same time. Randomization balances known and unknown factors on average, knocking out the biggest threats (history, selection) and giving you the strongest causal story. It also takes coordination and sometimes more time. When the stakes are high (policy shifts, district-wide rollouts, big price tags), this is the gold standard for educational program impact evaluations.
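Random assignment itself is the simple part. The sketch below shows one way to do it, assuming simple (unstratified) randomization of hypothetical classroom IDs; real trials often randomize within schools or grade levels, and `randomize` is an illustrative helper, not a standard tool.

```python
import random


def randomize(units, seed):
    """Randomly split units (students or classrooms) into treatment and control.

    A fixed seed makes the assignment reproducible, so it can be audited later.
    """
    rng = random.Random(seed)
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return sorted(shuffled[:half]), sorted(shuffled[half:])


# Hypothetical classroom IDs
classrooms = [f"room-{i:02d}" for i in range(1, 13)]
treatment, control = randomize(classrooms, seed=2024)
print("Treatment:", treatment)
print("Control:  ", control)
```

Because a coin flip, not enthusiasm or prior scores, decides who gets the program, the two groups should look alike on average, including on traits no one measured.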

Who Needs Which Rung and When

District leaders live with renewal cycles and public accountability. When you can say, “Here’s what changed because of this program,” you protect budgets and sharpen allocations. Stronger methods mean fewer surprises, better board conversations, and more trust in data-driven decisions.

Nonprofit leaders win funding with evidence, not enthusiasm. A tight quasi-experimental study can turn a good story into sustained support by showing your impact evaluations of educational services stand up to scrutiny. You also learn where your program lands strongest: which students, which contexts, which delivery choices.

EdTech marketing leaders face buyers wary of vendor-authored white papers. Independent educational program evaluations change the posture in the room. “A neutral party found X effect in Y context” doubles as marketing collateral and product feedback.

Rigor vs. Reality: Design What You Can Run

Time and dollars are real constraints. You won’t randomize every question, and you don’t need to. Pick a design that fits the decision window:

- Choose RCTs when the decision is big, the stakes are high, and randomization is feasible.

- Choose quasi-experimental when you need credible results on a tight timeline and can’t randomize.

- Use pre/post when you’re improving delivery, not claiming impact.

Sometimes, you want the five-course tasting menu (RCT). Other times, a really good sandwich (quasi) is what you can sit down for. Just don’t call empty calories (one-group pre/post) a balanced dinner. That’s how formative educational impact evaluations earn their keep: improving while measuring, with guardrails that keep you honest.

Where Claims Usually Get Wobbly

If you start noticing these patterns, your spidey-sense is working. It doesn’t mean the program is bad. It means the design isn’t answering the causal question.

- Usage-only comparisons. “Students who completed 80% of lessons gained 10 points.” Of course they did. The go-getters look great at everything. The right question is what happened to everyone assigned, not just the champions.

- Missing baselines. If prior achievement and attendance aren’t in the model, you can’t tell whether you measured program effect or pre-existing momentum.

- Opt-in pilots. Early adopters are prepared and enthusiastic. Fantastic for learning delivery. Risky for making claims that generalize to every school.

- Context confetti. One high-capacity classroom shows a big win. Great case study. Not a guarantee elsewhere. Transfer depends on routines, time, and supports.
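The usage-only trap is easy to reproduce. The toy simulation below assumes, hypothetically, that the program adds the same 2 points for every assigned student, and that students with above-average motivation (a trait no dashboard records) are the ones who finish the lessons. It compares the intent-to-treat estimate (everyone assigned vs. a randomly assigned control group) with the completers-vs.-non-completers gap.

```python
import random
import statistics


def simulate_usage_bias(n=20000, true_effect=2.0, seed=1):
    """Toy model: every assigned student gets the same true program effect,
    but more motivated students also complete more lessons."""
    rng = random.Random(seed)
    assigned_scores, control_scores = [], []
    completer_scores, non_completer_scores = [], []
    for _ in range(n):
        motivation = rng.gauss(0.0, 1.0)  # unobserved trait
        score = 50.0 + 5.0 * motivation + rng.gauss(0.0, 5.0)
        if rng.random() < 0.5:  # randomly assigned to the program
            score += true_effect  # same benefit regardless of usage
            assigned_scores.append(score)
            # Assumption: above-average motivation -> completes most lessons
            if motivation > 0:
                completer_scores.append(score)
            else:
                non_completer_scores.append(score)
        else:
            control_scores.append(score)
    itt = statistics.fmean(assigned_scores) - statistics.fmean(control_scores)
    usage_gap = statistics.fmean(completer_scores) - statistics.fmean(non_completer_scores)
    return itt, usage_gap


itt, usage_gap = simulate_usage_bias()
print(f"Intent-to-treat estimate:      {itt:.1f}")
print(f"Completers vs. non-completers: {usage_gap:.1f}")
```

In this setup the intent-to-treat estimate lands near the assumed 2 points, while the usage gap comes out several times larger, even though completing lessons adds nothing beyond assignment. The extra “gain” is motivation, not the program.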

You’ve probably seen all four wrapped into a single PDF. That’s why impact evaluations of educational services and software lean on designs that keep comparisons fair—even when your calendar refuses to cooperate.

How to Read “Evidence” Without a PhD

You don’t need a dissertation to spot rigor. Keep five questions in your pocket for the next shiny deck:

- Was there a comparison group? If you’re seeing only “before/after for one group,” you’re seeing change, not necessarily impact.

- Was assignment random—or at least carefully matched? Random is best. If not random, look for matching on prior scores, attendance, demographics. That’s how good impact evaluations of educational software and services are built.

- Could other factors explain the results? New curriculum? Teacher PD? Schedule changes? Good designs try to neutralize these.

- How big and consistent are the effects? Tiny samples swing wildly. Look for effects that hold across grades or campuses, or in the subgroups you most need to serve.

- Who ran the study? Vendor PDFs and testimonials are signals. Proof comes from neutral methods and transparent appendices. That’s the value of independent educational program evaluations.
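The “tiny samples swing wildly” point can be checked with a quick simulation. This hypothetical setup assumes a true effect of 3 points and a student-level standard deviation of 15, then reruns the same two-group study 2,000 times at two sample sizes to see how much the estimated effect bounces around. `estimate_spread` is an illustrative helper.

```python
import random
import statistics


def estimate_spread(n_per_group, true_effect=3.0, sd=15.0, trials=2000, seed=3):
    """Repeat a simple two-group study many times; return the mean and
    standard deviation of the estimated effects."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        treat = [rng.gauss(true_effect, sd) for _ in range(n_per_group)]
        control = [rng.gauss(0.0, sd) for _ in range(n_per_group)]
        estimates.append(statistics.fmean(treat) - statistics.fmean(control))
    return statistics.fmean(estimates), statistics.stdev(estimates)


small_mean, small_sd = estimate_spread(n_per_group=10)
large_mean, large_sd = estimate_spread(n_per_group=200)
print(f"10 per group:  mean {small_mean:.1f}, spread (SD) {small_sd:.1f}")
print(f"200 per group: mean {large_mean:.1f}, spread (SD) {large_sd:.1f}")
```

Both sizes are right on average, but with 10 students per group the spread should be roughly four to five times wider than with 200, since the standard error shrinks with the square root of the sample size. A single small pilot can easily show a big win or a loss by chance alone.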

Two bonus checks that punch above their weight: does the treatment group include everyone assigned (not just heavy users), and did the analysis adjust for baseline differences?

From “Looks Great” to “Holds Up”: A Clear Picture

Say a district pilots a reading intervention across eight schools. The vendor’s dashboard spotlights a familiar plot: students who completed at least 75% of modules grew more than those who didn’t. That’s a usage story, not an impact story.

Now run a MomentMN Snapshot:

- Define treatment by assignment, not usage. Everyone who was supposed to use the program counts, even if their usage lagged.

- Match or control for prior reading scores, attendance, English learner status, disability, and free/reduced lunch.

- Estimate subgroup effects. Maybe the biggest gains appear for Grades 3–4 and for newcomers to the district, while effects are modest for students already reading above grade level.

What you learn: the overall impact is positive but smaller than the usage slide suggested. It’s strongest where protected practice time exists and alignment to need is clearest. That’s enough to renew—with a focused scale plan and concrete supports for implementation. It’s also honest. Boards appreciate both.

That’s quantitative evidence you can defend. That’s what educational program impact evaluations are supposed to deliver.

Choosing the Right Rung for the Decision in Front of You

You don’t need an RCT for every choice. You do need the habit of asking, “What would have happened otherwise?” Then you pick the best design you can run now.

- Renewing or scaling district-wide? Use the strongest design you can run this term: an RCT if feasible, a tight quasi-experimental design if not.

- Improving delivery mid-year? Use formative educational impact evaluations with matched comparisons. Adjust and retest.

- Writing marketing claims or grant proposals? Bring independence. Even a compact quasi-experimental evaluation changes the conversation.

When time is tight (and it’s always tight), rapid cycle educational impact evaluations help you learn in weeks, not semesters. The answer doesn’t need to be encyclopedic; it needs to be fair and fast enough to inform a decision while the window is open.

Quick Self-Audit You Can Use Tomorrow

Take thirty seconds before the next decision:

- Did we count only heavy users instead of all assigned students?

- Are baseline differences missing from the model?

- Does the effect show up at just one high-capacity campus rather than across sites?

- Is our “evidence” mostly testimonials or vendor slides without a methods appendix?

- Would a board member reasonably ask, “How do you know it wasn’t just the motivated students or the new schedule?”

Two or more yeses? Your “impact” is probably inflated. Zero or one? You’re close to credible, and a small design tweak or an independent Snapshot gets you the rest of the way.

If You Only Remember Three Things

- Impact is about the counterfactual. Compared to what? A good design answers that without hand-waving.

- Assignment beats usage for causal claims. Use intent-to-treat, then explore dosage for implementation insights.

- Independence buys trust. When results come from a neutral party, skeptics lean in instead of away.

See What a MomentMN Snapshot Report Provides Your Team

You don’t need to become a statistician to make strong, defensible choices, but you do need a way to tell the difference between marketing momentum and measurable change. That’s the promise of independent educational program evaluations and the point of the MomentMN Snapshot Report: fast, fair, and built for real-world constraints. We help you choose the right rung on the rigor ladder, run comparisons that hold up, and turn your existing data into decisions you can stand behind.

If you want clarity instead of glitter, let’s talk. We’ll show you a sample Snapshot, walk through how a rapid cycle educational impact evaluation fits your timeline, and outline what it would take to answer your specific question: district renewal, nonprofit grant, or EdTech case study. Your students and your stakeholders deserve programs proven to work, not just programs that look good on a slide. If you’re ready to see what a MomentMN Snapshot can do for you, reach out to us today. We’ll meet you where you are, keep the lift light, and help you move from hopeful to defensible, on time and in plain language.