Your development team is celebrating a new feature release. Sales wants a case study. District leaders want proof. Your inbox is full of feedback, some glowing, some confusing. The question hanging over every sprint is simple enough to make you wince: Does this actually help students?

If it does, show it. If it doesn’t, fix it before renewal season does it for you.

Many EdTech products cycle through development without clear, independent evidence of impact. The result is familiar. Trust gets shaky. Renewals stall. Funding opportunities go to competitors with stronger proof.

We’ll walk through how educational program impact evaluations fit cleanly into every stage of the product development lifecycle. We will show how rapid-cycle educational impact evaluations help you learn fast, how formative educational impact evaluations keep teams building the right thing, and how impact evaluations of educational software become the backbone of credible marketing and renewal conversations.

The goal isn’t academic theater. It’s a practical system that turns product decisions into outcomes you can defend.

Why Evaluation Belongs in the Product Lifecycle

Evaluation is often treated like a final stamp of approval. Ship the thing, collect a few testimonials, and then go hunting for a white paper. In the real world, evaluation does its best work earlier and more often. It drives iteration, sharpens messaging, and accelerates adoption because it answers the questions that matter to the people who buy and use your product.

Let’s clear the fog about what evaluation is not:

It is not a compliance box you check for a grant report.

It is not something you dust off once a year for a marketing PDF.

The real payoff looks different. You pivot faster because you have real user signal, not vibes. You improve credibility with districts because you can show how outcomes change for specific grades, schools, and subgroups. Your product roadmap stabilizes because leadership has proof that a certain feature moves the needle and another one does not.

This is where the MomentMN Snapshot Report enters as a practical option. It is an independent educational evaluation that seamlessly integrates into your team’s ongoing cycles without disrupting their calendar. Think of it as a compact, third-party check that keeps you honest and helps your partners feel confident. When your partners see that you invest in independent educational program evaluations, they relax a little. They see you’re not just selling; you’re measuring the metrics they use to decide.

The Lifecycle Phases: Where Evaluation Fits

Below is a simple walkthrough of the EdTech lifecycle with evaluation stitched into each stage. The intent is to keep the lift light while you get answers that move the work forward.

Ideation and Early Development

Goal: Decide if the problem is worth solving, and if your proposed approach makes sense to teachers and students.

Evaluation role: Start with needs assessments and quick cycles of feedback. Bring teachers into short, structured interviews. Put clickable prototypes in front of small student groups. Capture pain points in plain language. You are not trying to prove a large effect size. You are trying to see if the scaffolding holds.

A few tight habits help here. Write down your hypotheses and the behaviors you expect to see in classrooms. Define success signals that are small enough to measure within days, not quarters. Pull light data from existing workflows. Time-on-task from your logs, a tiny pre/post for one skill, a teacher rubric that tags confusion points. Early signal keeps you from scaling the wrong idea.
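As one illustration of the "tiny pre/post for one skill" idea, here is a minimal sketch in Python. The scores, the 0-10 scale, and the function name `pre_post_summary` are all hypothetical, invented for this example. A summary like this is an early signal, not proof of impact, because there is no comparison group.

```python
from statistics import mean

# Hypothetical pre/post scores (0-10 scale) for six students on one skill.
# These numbers are invented for illustration only.
pre_scores = [4, 5, 3, 6, 5, 4]
post_scores = [6, 5, 5, 7, 6, 4]


def pre_post_summary(pre, post):
    """Return the mean gain and the share of students whose score improved."""
    if len(pre) != len(post) or not pre:
        raise ValueError("pre and post must be non-empty and the same length")
    # Gain for each student, paired by position in the two lists.
    gains = [after - before for before, after in zip(pre, post)]
    return {
        "mean_gain": mean(gains),
        "share_improved": sum(g > 0 for g in gains) / len(gains),
    }


summary = pre_post_summary(pre_scores, post_scores)
print(summary)
```

Reporting the share of students who improved next to the mean gain helps a small team see whether a result comes from a few large jumps or from broad movement.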

Pilot and Prototype Testing

Goal: Validate usability and check for short-term outcomes that justify a broader launch.

Evaluation role: This is where rapid-cycle educational impact evaluations shine. The aim is to quickly identify what is working and what is falling short, using small samples and short timeframes. You are not building a winter-long trial. You are running sprints with a clear question. Does this reading routine improve weekly fluency for second graders who start below benchmark? Does the new feedback widget nudge middle school usage by five minutes per day?
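A sprint question like the feedback-widget one can be checked with very little code. The sketch below assumes a hypothetical two-week pilot with five students per group and invented daily-minute averages. It computes the difference in mean usage and an exact permutation p-value, which is one reasonable choice for tiny samples, not the only one.

```python
from itertools import combinations
from statistics import mean

# Hypothetical average daily minutes per student during a two-week sprint.
# Values are invented for illustration only.
with_widget = [22, 25, 19, 27, 24]
without_widget = [18, 20, 17, 21, 19]


def mean_difference(treated, comparison):
    """Difference in mean daily minutes (treated minus comparison)."""
    return mean(treated) - mean(comparison)


def exact_permutation_p(treated, comparison):
    """One-sided p-value: the share of all possible regroupings whose
    difference in means is at least as large as the observed one."""
    observed = mean_difference(treated, comparison)
    pooled = list(treated) + list(comparison)
    n_treated = len(treated)
    n_rest = len(pooled) - n_treated
    total = sum(pooled)
    as_extreme = 0
    n_splits = 0
    for idx in combinations(range(len(pooled)), n_treated):
        chosen_sum = sum(pooled[i] for i in idx)
        diff = chosen_sum / n_treated - (total - chosen_sum) / n_rest
        n_splits += 1
        # Small tolerance guards against floating-point ties.
        if diff >= observed - 1e-12:
            as_extreme += 1
    return as_extreme / n_splits


diff = mean_difference(with_widget, without_widget)
p_value = exact_permutation_p(with_widget, without_widget)
print(f"difference: {diff:.1f} minutes/day, one-sided p: {p_value:.3f}")
```

With groups this small, treat the result as a prompt for the next sprint rather than a finding to publish.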

Keep the burden low for classroom partners. Align check-ins with existing staff meetings. Use the assessments teachers already trust when possible. Share interim findings in one-page updates so teachers see themselves in the story. These cycles give product teams confidence to prioritize features that move real outcomes. They also help you retire shiny features that do not.

Launch and Market Entry

Goal: Prove value to districts and funders in a way that stands up to scrutiny.

Evaluation role: Independent third-party evidence is the lever here. Educational program impact evaluations conducted by a neutral party do double duty. They guide your next sprint, and they serve as persuasive collateral. The difference between “our internal dashboard shows improvement” and “an external analyst found a measurable effect in real classrooms” is the difference between a polite nod and a serious procurement conversation.

Districts do not distrust you out of spite. They are responsible stewards of public dollars. They must show how the tool supports outcomes for specific groups of students and in the contexts they manage. That is why vendor claims and independent validation land differently. When the story is told by a neutral evaluator, administrators can take it to the board with a straight face.

Growth and Scaling

Goal: Expand adoption across districts and into new regions.

Evaluation role: As you grow, your evaluation questions shift from “does this work at all” to “where does this work best.” Larger quasi-experimental studies answer that well. Subgroup analyses help you identify the combination of grade band, schedule, and staffing that produces the strongest effects.

Breaking results out by grade band, baseline proficiency, EL (English learner) status, and FRL (free or reduced-price lunch) status shows where the product lands strongest. This is how educational program impact evaluations support state and national growth. You earn the right to claim broader effectiveness because you can show patterns, not just anecdotes.
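A subgroup breakdown can start as simply as the Python sketch below. The records, the grade bands, and the function name `subgroup_differences` are hypothetical. A real analysis would also adjust for baseline differences and report uncertainty for each subgroup.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (grade_band, used_product, score_gain).
# Values are invented for illustration only.
records = [
    ("K-2", True, 6), ("K-2", True, 8), ("K-2", False, 3), ("K-2", False, 5),
    ("3-5", True, 4), ("3-5", True, 5), ("3-5", False, 4), ("3-5", False, 3),
]


def subgroup_differences(rows):
    """For each subgroup, return mean gain of users minus mean gain of non-users."""
    gains = defaultdict(lambda: {True: [], False: []})
    for subgroup, used, gain in rows:
        gains[subgroup][used].append(gain)
    result = {}
    for subgroup, by_use in gains.items():
        # Skip subgroups that lack either users or non-users.
        if by_use[True] and by_use[False]:
            result[subgroup] = mean(by_use[True]) - mean(by_use[False])
    return result


differences = subgroup_differences(records)
for subgroup, diff in differences.items():
    print(f"{subgroup}: users gained {diff:+.1f} points more than non-users")
```

The same function works for any subgroup label, such as EL status or FRL status, as long as each record carries that label in its first field.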

This stage is ripe for strategic storytelling. Pair a clean main effect with clear subgroup results. Show how a district with limited instructional minutes still saw gains because implementation focused on a few high-yield routines. Funders notice that level of specificity. So do curriculum and instruction teams, who need to choose what to protect in the schedule.

Maturity and Renewal

Goal: Maintain market share and support district renewal cycles in tight budget environments.

Evaluation role: Keep the MomentMN Snapshot Report in rotation as a continuous, lightweight evidence update. Renewal season is stressful for everyone. Swap “convince” for “co-plan.” Bring a report and leave with a renewal plan that everyone can explain. When you can hand a superintendent a short report that uses the district’s own data to show outcomes, you change the tone of the meeting.

Instead of persuading, you are collaborating. Together, you identify product refinements that sustain outcomes next year. Maybe a grade level needs a different cadence. Maybe a subgroup needs specific support. You are not guessing. You are using evidence.

The Three Lenses of Evaluation

Think of evaluation as a prism rather than a single lens. Different angles produce different kinds of clarity. Here’s a look at each one, including what makes them distinct and why they’re used.

Formative Lens: To Build Better Products

Early evaluations answer three questions. What is working? What is confusing? Where do we iterate first? With an SEL app, for example, formative educational impact evaluations might reveal that students respond well to short reflection prompts but ignore longer ones after lunch. The takeaway: keep the reflection, cut the five-minute monologue.

Teachers might share that the off-screen debrief is the real engine of growth, which means your product needs better teacher-facing cues. None of this requires a semester-long study. It does require honest loops with the classroom.

Summative Lens: To Prove It Works

As you transition from building to scaling, you need to show measurable outcomes, based on fair comparisons and delivered fast enough to matter. Quasi-experimental designs compare students who use the product to similar students who do not, while controlling for baseline differences. Randomized designs go a step further by assigning classrooms or students by lottery.

Both options lift confidence because they make a fair comparison. Districts, nonprofits, and funders look for this level of rigor when dollars and schedules are on the line. This is where educational program impact evaluations and impact evaluations of educational interventions move from “nice to have” to “non-negotiable.”
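To make "controlling for baseline differences" concrete, here is a minimal Python sketch of a baseline-adjusted comparison using a pooled within-group slope (the classic ANCOVA-style adjustment). The scores and the function name `adjusted_difference` are hypothetical. This illustrates the idea under simple assumptions and is not a description of how any particular evaluator runs the analysis.

```python
from statistics import mean

# Hypothetical (pre, post) scores. Product users start lower on average.
# Values are invented for illustration only.
users = [(40, 52), (50, 60), (60, 68)]
comparison = [(50, 55), (60, 63), (70, 71)]


def adjusted_difference(treated, control):
    """Post-score difference (treated minus control), adjusted for
    baseline using a slope pooled across the two groups."""
    def centered_sums(group):
        pre_mean = mean(pre for pre, _ in group)
        post_mean = mean(post for _, post in group)
        sxy = sum((pre - pre_mean) * (post - post_mean) for pre, post in group)
        sxx = sum((pre - pre_mean) ** 2 for pre, _ in group)
        return pre_mean, post_mean, sxy, sxx

    t_pre, t_post, t_sxy, t_sxx = centered_sums(treated)
    c_pre, c_post, c_sxy, c_sxx = centered_sums(control)
    # Pooled slope: how much post scores rise per point of baseline score.
    slope = (t_sxy + c_sxy) / (t_sxx + c_sxx)
    raw = t_post - c_post
    # Remove the part of the raw gap explained by the baseline gap.
    return raw, raw - slope * (t_pre - c_pre)


raw_gap, adjusted_gap = adjusted_difference(users, comparison)
print(f"raw difference: {raw_gap:+.1f}, baseline-adjusted: {adjusted_gap:+.1f}")
```

In this invented data the raw comparison makes the product look harmful because users started ten points behind. Once baseline is accounted for, the sign flips, which is why districts and funders ask how the comparison group was built.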

Marketing Lens: To Earn Trust and Credibility

Independent results pull double duty in the field, and they answer the follow-up questions before procurement asks them. A clean effect estimate is marketing gold, and it reads differently from a testimonial. Case studies built on impact evaluations of educational interventions outperform generic quotes because they show their work.

A director of curriculum can see the method, the baseline adjustment, the subgroup patterns, and the practical conditions that made the result happen. That is the kind of evidence people remember when they are in a renewal meeting.

What Happens Without Evaluation

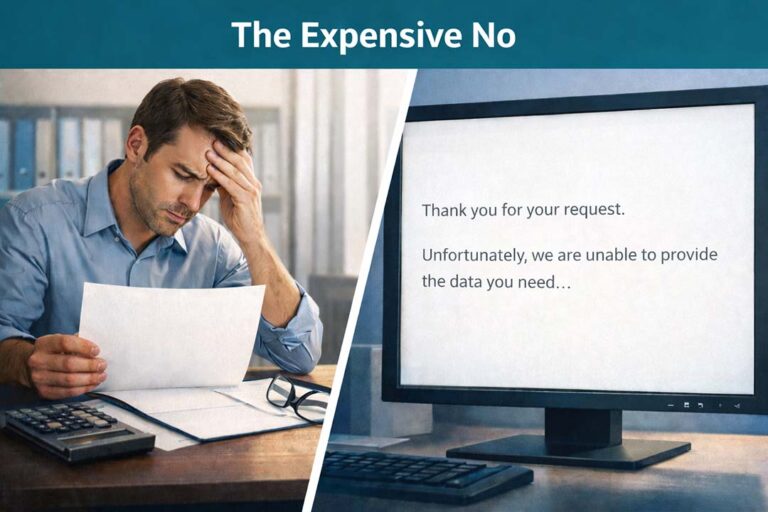

Skipping evaluation risks more than a bad slide. It can put your product’s future at risk. Products stall after pilot. Enthusiastic early adopters can carry a tool for a semester, but without measured outcomes, momentum fades.

Districts drop renewals because ROI never made it past the dashboard. It is not personal. Budgets are real.

Nonprofits lose grant opportunities when they cannot show independent evidence of impact. Even the best stories need numbers that hold up.

Without evaluation, you are basically waving your arms in the boardroom, saying, “Trust me, it works.” Spoiler: most superintendents do not buy it. That pitch is fine for anecdotes, not for procurement.

Practical Roadmap for EdTech Leaders

Here is a simple playbook. Print it, share it, and run it.

- Early stage: Run small, rapid-cycle educational impact evaluations with minimal data burden. Tie each cycle to a single, testable hypothesis. Bring teachers into the loop for quick checks.

- Ready to launch: Invest in an independent educational program evaluation for credibility. A third-party report carries more weight with buyers than an internal dashboard.

- Scaling: Use impact evaluations of educational services to show broader effectiveness. Include subgroup analyses and site-level variation. That is how you move from one strong district to many.

- Facing renewals: Request a MomentMN Snapshot Report to provide districts with ROI evidence built on their own data. Keep the conversation focused on results and next steps instead of persuasion.

District leaders now expect vendors to bring their own evidence or to respond positively when a district requests an evaluation. If you cannot, your competitor will.

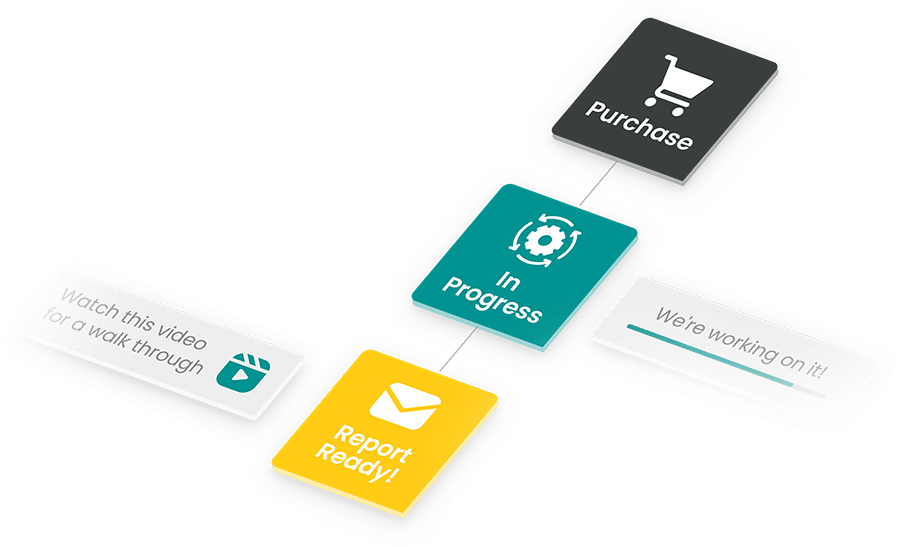

How the MomentMN Snapshot Report Fits

It’s Fast

You need answers in weeks, not semesters. The MomentMN Snapshot Report delivers a focused analysis that aligns with active decision windows. That means you can bring a result to a cabinet meeting on time, not after the budget is already set.

Always Independent

Results land differently when they come from a neutral party. A superintendent can take independent educational program evaluations into a board meeting without flinching because the methods are transparent and the analysis is not vendor-authored.

Effective, Yet Low-Burden

Most districts already collect the data needed to answer high-value questions. We use what exists. Your partners do not need to create new tests or launch a parallel system of record.

Easy and Flexible

The Snapshot works at multiple lifecycle stages. Early on, it looks like a quick, rapid-cycle educational impact evaluation to sort signal from noise. During launch and growth, it becomes the compact proof point that district buyers ask for.

In renewal season, it functions as a short, clean update that confirms outcomes and flags where implementation support will matter most. You can also position it alongside your internal analytics to create a single story: usage patterns in your product, outcomes in the district’s system.

It’s Time to Get Some Perspective

If you are ready to move from hope to evidence, we would love to talk. A MomentMN Snapshot Report will show you what is working, for whom, and how to prove it. We will keep the process light, the language plain, and the answers useful, because your product, your students, and your stakeholders deserve more than guesswork.