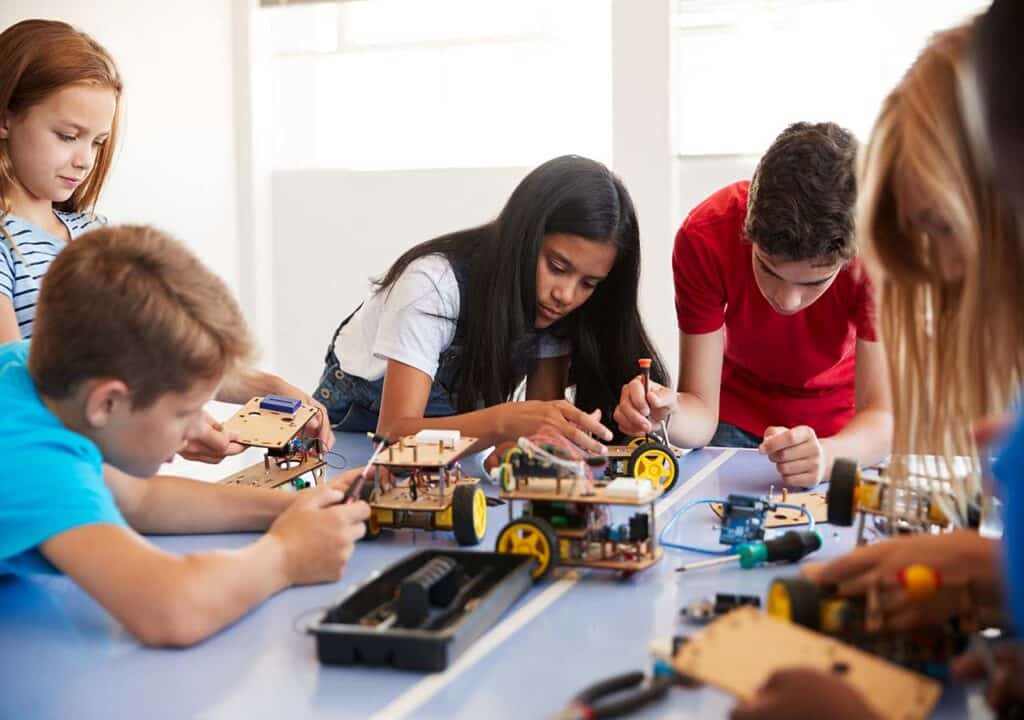

We’ve all heard the glowing success story. A teacher tries a new program in her classroom. She swears by it. The kids are thriving, test scores are up, and the classroom culture is vibrant. Maybe there’s a local news story or a social media post that makes the rounds.

It feels good, sounds good—so it must be good, right? But if you’re a district leader, an EdTech executive, or the head of an educational nonprofit, you’ve probably asked the next question: is it actually good for all students? Or did it just work in that one room, with that one teacher, under that one set of circumstances?

In K-12 education, stories travel fast. They’re memorable. They’re sticky. But stories aren’t strategies. When you’re responsible for deciding whether to renew a multi-million-dollar contract for a learning platform or advocate for a new service to support students, feel-good stories won’t cut it. Anecdotes might win you a meeting, but they won’t win you confidence—not from your school board, not from funders, and certainly not from the taxpayers who want to know where their dollars are going.

That’s where quantitative data in education comes in. Quantitative data is objective, scalable, and, most importantly, trustworthy, and it is what separates speculation from strategy. It’s how district leaders move from “it worked once” to “it works, full stop.” It’s how nonprofits prove their programs are worth continued funding. And it’s how EdTech companies show real value, not just polished marketing.

The Limitations of Anecdotes in Education

Anecdotes are easy to love. They’re short, personal, and often uplifting. A single teacher’s experience with a new curriculum tool. A student who turns their grades around after joining an intervention program. These stories paint vivid pictures—but they’re just that: pictures, not the full landscape.

Anecdotes stick around in education because they hit us where it counts: emotionally. Humans are wired to connect with stories. They’re easy to tell, easy to remember, and they confirm what we hope is true. “See? The program works!” It’s satisfying. But it’s also incomplete.

The problem is, anecdotes are limited by nature. They capture a single experience—or maybe a handful of them—not a trend. What works in one classroom may flop in another. A rockstar teacher can make just about anything shine. We’ve seen the “one amazing teacher effect,” where the real variable wasn’t the tool, but the talent. That’s not a replicable strategy for a district of 30,000 students.

The other risk? Cherry-picking. When decisions are based on stories, it’s all too easy to gather the examples that make a program look good, while quietly ignoring the times it didn’t work. That’s a dangerous game when the stakes are as high as they are in public education.

As we like to say, “Anecdotes are great for newsletters. Quantitative data is better for million-dollar decisions.” If you’re serious about measuring education program success, you need more than a few good stories. You need evidence that stands up across classrooms, schools, and student subgroups—not just a single, shining example.

What Is Quantitative Data and Why Should You Care?

When we talk about quantitative data in education, we’re talking about numbers, not narratives. It’s the kind of data that can be measured, counted, and compared. That means things like standardized test scores, benchmark assessments, attendance records, behavior incident counts, and engagement metrics from educational software.

The benefits of quantitative data are straightforward but powerful. First, it’s objective. Numbers don’t have personal biases or rose-colored glasses. They tell the story as it is. Second, it’s scalable. One teacher’s experience is valuable, but quantitative data lets you see patterns across entire districts.

And then there’s equity. Quantitative data lets you see not just whether a program works, but who it works for. Are English learners benefiting at the same rate as their peers? What about students with disabilities? Without the numbers, it’s all too easy to miss these critical nuances.
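To make the subgroup question concrete, here is a minimal sketch of what disaggregating results can look like. The records, subgroup labels, and the `mean_gain_by_subgroup` helper are all made up for illustration; a real evaluation would use your own assessment data and a more careful design than a simple pre/post difference.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (student subgroup, pre-test score, post-test score).
# These values are invented for illustration only.
records = [
    ("English learner", 40, 46),
    ("English learner", 35, 39),
    ("Non-English learner", 50, 60),
    ("Non-English learner", 55, 67),
]

def mean_gain_by_subgroup(rows):
    """Return the average post-minus-pre gain for each subgroup."""
    gains = defaultdict(list)
    for subgroup, pre, post in rows:
        gains[subgroup].append(post - pre)
    return {subgroup: mean(values) for subgroup, values in gains.items()}

gains = mean_gain_by_subgroup(records)
for subgroup, gain in gains.items():
    print(f"{subgroup}: average gain {gain:.1f} points")
```

In this invented data set the overall average gain looks healthy, yet English learners gain less than half as much as their peers. That gap is exactly the kind of nuance a single overall number, or a single story, would hide.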

Finally, quantitative data enables comparability. You can actually weigh one program’s outcomes against another’s. Apples to apples. That’s a critical advantage when you’re deciding which interventions or products to invest in. It’s also why so many district leaders, nonprofits, and EdTech companies rely on formative educational impact evaluations to guide their next moves.
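One common way to put two programs on the same footing is a standardized effect size such as Cohen's d, which expresses the difference between participants and a comparison group in standard-deviation units. The sketch below is an illustration under simplifying assumptions (made-up scores, a comparison group that is otherwise similar to participants); it is not a description of how any particular evaluation, including ours, is carried out.

```python
from math import sqrt
from statistics import mean, stdev

def cohens_d(participants, comparison):
    """Standardized mean difference between two groups, using the pooled standard deviation."""
    n1, n2 = len(participants), len(comparison)
    s1, s2 = stdev(participants), stdev(comparison)
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(participants) - mean(comparison)) / pooled_sd

# Hypothetical score gains for two programs measured on different assessments.
program_a = cohens_d([2, 4, 6], [1, 3, 5])
program_b = cohens_d([20, 40, 60], [15, 35, 55])

print(f"Program A effect size: {program_a:.2f}")
print(f"Program B effect size: {program_b:.2f}")
```

Because the result is expressed in standard-deviation units rather than raw points, a program measured on a 10-point scale and one measured on a 100-point scale can be compared directly. Keep in mind that the comparison is only as fair as the comparison groups behind it.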

Why Educational Leaders Need Quantitative Evidence—Now More Than Ever

We’re living in the age of accountability. Superintendents, school boards, and funders want evidence. And rightfully so.

Districts are operating under tighter budgets than ever. Every dollar has to count, and every investment has to be justified. Public scrutiny is constant. Parents, community members, and local media are paying attention. And at the end of the day, these aren’t just budget decisions. They’re student success decisions. You can’t afford to guess.

That’s where the MomentMN Snapshot Report comes in. Our rapid-cycle educational impact evaluations give you fast, actionable insights. We’re not talking about studies that take a year and a research team of 12 to complete. Our approach is nimble, leveraging data you already have to produce clear findings quickly.

We also provide independent educational program evaluations, which is a fancy way of saying: we’re not here to make your program look good just because you paid us. Our independence is your credibility. When you present findings to your board or funders, that objectivity speaks volumes.

And we don’t just drop a data file in your inbox and call it a day. Our reports are designed to be visually digestible. Charts that make sense, insights that matter, and summaries you can actually use to explain what’s working, what’s not, and what’s next. That’s what makes our evaluations a core asset for data-driven school district decisions and for any educational leader trying to show a clear education program ROI.

When Anecdotes Led to Waste—And Data Led to Results

Let’s look at an example. A large district invested in a widely hyped reading intervention. The pilot teacher raved about it, and the district leadership, swayed by these testimonials, greenlit a district-wide rollout. But a year in, test scores hadn’t budged. The program wasn’t scaling because the pilot success was tied to that one teacher’s unique approach, not the intervention itself. The cost? Hundreds of thousands of dollars and a year of missed opportunity for students who needed real support.

Contrast that with a district that partnered with us. Before expanding an intervention, they commissioned a Snapshot Report. We used their own data to evaluate the impact across different schools, demographics, and prior achievement levels. Turns out, the program was driving gains—but only in specific contexts. With that knowledge, the district scaled the program strategically, adapting support where needed. The result? Better outcomes, smarter spending, and no headlines about wasted funds.

That’s the difference between impact evaluations of educational software based on anecdotes and those built on data. That’s the power of knowing—not guessing—when it comes to measuring education program success.

How to Start Shifting From Stories to Data

If you’re ready to trade anecdotes for answers, you might be wondering—where do I even begin? Shifting from stories to data doesn’t have to mean reinventing your entire decision-making process. In fact, it’s a lot more about being strategic with what you’re already doing—just smarter, sharper, and yes, more data-driven. Here’s a practical roadmap to get started, no Ph.D. in statistics required.

Step 1: Identify What You Want to Measure

First, you need to know what success looks like. That starts by asking a deceptively simple question: What do I want to know? Are you trying to measure academic growth? Maybe attendance? Student engagement? Behavior improvements?

The key here is alignment. The metrics you choose should map directly to your district’s or organization’s goals—not just what’s easy to measure. If your district is focused on literacy gains in K-3, zeroing in on reading proficiency makes sense. If you’re an educational nonprofit running after-school programs, maybe you care about attendance and engagement rates. For EdTech companies, you’ll want to measure software usage patterns and learning gains across diverse user groups.

No matter what you pick, being intentional about your metrics ensures that your evaluation isn’t just data for data’s sake. You’re gathering evidence that answers the specific questions your leadership, funders, or partners are already asking—or should be.

Step 2: Prioritize Programs and Services Where You Lack Data

Let’s be honest: not everything needs a deep-dive evaluation. But some things absolutely do. Start by looking at your highest-cost programs, your most widely used services, or those up for renewal soon. These are the obvious candidates for formative educational impact evaluations.

If you’re a district leader, ask: Which curriculum tools, digital platforms, or interventions are eating up the biggest slice of your budget pie? Which ones are teachers clamoring to keep—or equally, begging to replace?

For nonprofits, the question might be: Which of our programs do we trumpet most to funders…and do we actually have numbers to back up those claims?

And if you’re leading marketing at an EdTech company, you might wonder: Which products are underperforming in retention, or where are we losing deals because we can’t prove our impact?

This is where data gaps become glaring. And those gaps? They’re opportunities.

Step 3: Partner with an Independent Evaluator

Here’s where we see a lot of folks go wrong: they try to handle evaluations in-house. On the surface, that makes sense. You’ve got smart people. You’ve got data. Why not just crunch it yourselves?

But here’s the problem: internal evaluations can have built-in bias. No matter how well-intentioned, your team has skin in the game. That makes it hard to ensure credibility when presenting findings to an outside audience. That’s why independent educational program evaluations matter.

An external partner like Parsimony, through our MomentMN Snapshot Reports, brings objectivity to the table. We’re not here to prove a program works just because you love it—we’re here to find out if it actually does. That credibility makes your data persuasive, not just presentable.

Step 4: Use Rapid-Cycle Evaluations

You don’t have time for a three-year longitudinal study. You need insights that can guide decisions next quarter—or next budget cycle. That’s why rapid-cycle educational impact evaluations exist.

Think of rapid-cycle evaluations like a pit stop in a race. You’re still moving forward, but you’re checking your systems, adjusting strategy, and optimizing performance—all while staying in the game.

Our Snapshot Reports are built for this. We leverage the data you’re already collecting—no extra surveys, no extra burdens—to deliver insights quickly. That means you get the data you need when it still matters, not two years after the decision window has closed.

Step 5: Communicate the Findings Effectively

Finally, what good is data if it sits on a shelf—or worse, in a password-protected PDF that no one ever opens again?

The final step is to ensure that your findings are not confined to the research department. They should be actively used in board meetings, community updates, donor reports, and vendor negotiations. Transparency builds trust. Whether you’re proving ROI to funders, justifying budget allocations to school boards, or showing your district partners the real impact of your EdTech product, effective communication is where the rubber meets the road.

We design our reports with this in mind: clear visuals, plain language, and key takeaways that help you not just understand the data, but use it.

The Future is Data-Informed, Not Data-Overwhelmed

No one is asking you to become a data scientist overnight. The goal here isn’t to drown in spreadsheets or get seduced by fancy dashboards with more filters than a coffee shop.

It’s about having just the right amount of evidence to:

- Make smarter decisions

- Prove ROI to funders and boards

- Improve student outcomes in meaningful, measurable ways

As we like to say, “Data doesn’t replace professional judgment, but it sharpens it.” You don’t need to abandon your instincts or experience. You just need the right data to support them.

When it comes to evidence-based education decisions, the difference between an educated guess and a decision is data. And not just any data: your data, analyzed independently, and made usable for real-world action. That’s how we help districts, nonprofits, and EdTech companies move from gut feelings to grounded leadership.

If you’re ready to see what that looks like in practice, we’d love to show you. Get a sample MomentMN Snapshot Report to see how we can help translate your existing data into clear, actionable insights.