When a district leader says, “Show me the data,” what they often receive is a polished report, full of impressive outcomes from a handful of students in just the right setting. The graphs are clean. The language is confident. The results? Glowing.

But there’s a catch. Most of that data comes from what’s called an efficacy study. And while those results might look promising, they only tell part of the story.

In public education, the stakes are high. Leaders are making real decisions that affect real students: which tools to fund, which interventions to scale, and which programs to sunset. These aren’t just budget choices. They’re equity decisions. They’re student-outcome decisions. And that means the type of research behind the data matters.

Knowing the difference between an efficacy study and an effectiveness study can be the difference between spending smart and spending blind.

MomentMN Snapshot Reports are designed for exactly this kind of real-world need. We don’t test in perfect conditions. We focus on how programs actually perform in classrooms that face daily disruptions, shifting needs, and real-life complexity.

Let’s break down what sets efficacy and effectiveness studies apart—and why it matters more than ever.

What Is an Efficacy Study?

As the name suggests, an efficacy study asks an important question: Can this program or product work when everything is going right?

For this reason, these studies are typically conducted under near-laboratory conditions. Researchers control as many variables as possible. The sample is small. The rollout is closely monitored. The students involved may be selected for consistency. In some cases, teachers get extra training or support. In others, the program is delivered by the research team itself to ensure that they control all the variables and run a nearly perfect experiment.

According to the Institute of Education Sciences, most studies still focus on efficacy (testing under ideal conditions) rather than effectiveness in everyday school settings. Yet real-world decision-making depends on evidence of effectiveness.

In education, you might see this kind of study in a limited pilot. For example, let’s say a new reading software gets tested with three classrooms of high-performing fifth graders. The students have reliable internet access. The teachers are enthusiastic. The implementation sticks close to the script.

Not surprisingly, the results look good.

And that’s the value of an efficacy study. It gives developers, funders, and early adopters a sense of what the program could do. It helps get ideas off the ground. It lays the foundation for early marketing. In other words, it shows what’s possible when every piece falls into place.

Here’s the catch: real classrooms aren’t controlled labs. Most districts aren’t working in a vacuum. Classrooms are messy. Staff turnover happens. Tech support is spotty. Students come with different needs and life circumstances. Educators rarely get a perfect scenario with ideal results.

So the real question becomes: Will this program still work in your schools, with your students and teachers?

That’s where efficacy ends and effectiveness begins.

What Is an Effectiveness Study?

An effectiveness study examines how a program performs in actual schools under real-world conditions and whether it improves outcomes. No perfect test group. No cherry-picked data. Just the reality of daily life in a busy district.

Let’s say your district introduces a new ELA curriculum across its middle schools. The rollout isn’t flawless. Some teachers dive in headfirst. Others struggle. A few classes miss a week of instruction due to snow days. Still, the program is used, and the district wants to know what impact—if any—it’s having.

That’s where an effectiveness study shines.

It uses existing data—things like attendance, grades, behavior records, and benchmark assessments—to assess whether the program is helping. There are no new surveys to fill out. No extra testing required. No artificial conditions added in. Teachers teach the way they normally do. Students use the program as part of their regular day.

For district leaders, nonprofit partners, and EdTech providers, this type of educational impact evaluation answers the question that matters most: Is this working in the real world? It’s useful to know that a program works under perfect conditions, but decision-makers need to know whether it will work under ordinary ones too.

Effectiveness studies align more closely with policy decisions because district leaders care most about the overall impact, rather than perfect implementation. They need to know if a program works across real classrooms, with all the variability that comes with them. That’s precisely where our focus lies.

Our MomentMN Snapshot Reports are built on real data, pulled from actual school settings, and analyzed to give clear, decision-ready insights. They’re fast, low-burden, and tailor-made for teams who don’t have time to wait a year for answers.

Key Differences Between Efficacy and Effectiveness

The easiest way to think about it? Efficacy shows what could work if every variable is accounted for and controlled. Effectiveness shows what does work, not in a perfectly curated environment, but when the program is used in real-life situations with your own students and teachers.

And when it’s time to make a decision, whether to renew a contract, scale a pilot, or fund a new tool, effectiveness matters more. Because without data grounded in your district’s reality, you’re left guessing. Or worse, you implement a new tool or program and get the opposite of what you expected.

One more thing: Many programs that perform well in controlled settings fall apart when scaled. It’s not always a flaw in the product. Sometimes, it’s just the difference between theory and practice. Between a hand-picked classroom and a district with real constraints.

That’s why impact evaluations of educational services that focus on effectiveness are essential. They help teams avoid costly missteps, direct funds to what’s working, and make the kind of smart, student-centered choices that lead to long-term success.

Why This Distinction Matters for Your Role

Now that we’ve defined the difference, let’s look at how this distinction impacts the people actually making decisions every day.

For District Leaders (Director of Curriculum & Instruction)

When you’re managing a district’s instructional strategy, you don’t have time for guesswork. Every decision—from textbook adoptions to digital tools—carries a price tag, a public expectation, and a potential outcome that directly affects students. That means your choices need to be rooted in real, local evidence.

Sure, a program might boast glowing reviews and a few flashy graphs from a pilot study, but if that data comes from a different, highly controlled context, it’s not enough to support your next big move. You need to know: Is it working here?

This is where effectiveness studies come in. And it’s exactly what the MomentMN Snapshot Report delivers: clear, independent educational program evaluations built on your district’s own data, not on someone else’s theory.

Here’s how that helps you:

- Make data-driven education decisions: You’ll know what’s working and where, not just in general, but in your schools.

- Justify purchasing decisions: Show your board or superintendent that you’re backing investments with evidence.

- Demonstrate ROI: When budgets are tight, you need to prove that the resources you’re using are actually driving results.

- Streamline impact evaluations of educational interventions: Without draining your team’s time or attention, you’ll get clarity on what’s helping students move forward.

- Support equitable outcomes: Our reports allow for subgroup analysis, helping you spot where interventions are truly closing gaps.

And all of this comes without burdening your already busy staff. No extra surveys. No clunky data requests. Just fast, focused answers built from what you already track. That lets your team stay focused on students while we handle the heavy lift.

For Educational Nonprofits

If you run an educational nonprofit, you already know how much time and energy go into showing the world that your work is making a difference. Grant applications ask for evidence. District partners ask for validation. Donors want to see results. Without credible evidence, it becomes harder to secure the backing your program deserves.

Here’s the challenge: Collecting that proof can be expensive, time-consuming, and hard to execute without disrupting the very work you’re trying to evaluate. And most nonprofits are already juggling more tasks than time allows.

That’s why independent effectiveness studies matter. When the data comes from a neutral third party—and when it reflects real conditions in schools—it speaks louder than any internal report ever could.

A MomentMN Snapshot Report gives you that edge.

- It offers credible, external proof of impact—the kind that strengthens your next proposal or annual report.

- It deepens your school partnerships by showing you’re committed to transparency and shared outcomes.

- It supports program validation across different sites, letting you see where your efforts are strongest.

- It feeds back into your work, offering insights that help refine services, improve outcomes, and drive smart expansion.

And because our reports rely on existing district data, there’s no extra load on your staff or your partners. That means you stay focused on serving students, not chasing metrics.

For EdTech Marketing Leaders

In the EdTech world, everyone says their product “drives engagement” or “improves outcomes.” But if you want to stand out, especially with decision-makers in large districts, you need more than a slick pitch. You need proof. Not from five years ago, not from a tiny trial, but from a real district using your product in everyday conditions.

A MomentMN Snapshot Report does exactly that.

It’s an independent evaluation of educational software based on real student outcomes. That means it doesn’t just boost your credibility. It gives you a powerful story to tell—one that resonates with buyers who’ve heard every claim in the book.

Here’s how it helps:

- Craft compelling case studies grounded in hard data, not marketing copy.

- Support your sales team with district-validated insights that set you apart.

- Use it across your K–12 EdTech marketing strategy to build trust with skeptical buyers.

- Guide product development by showing what’s working best and where improvements are needed.

- Strengthen your product validation in a market where credibility opens doors.

And because our evaluations move fast, you’ll have results ready for your next sales cycle, not after the window closes. That means you can move quickly, make changes, and keep improving your product, increasing the impact you have on your target audience.

The Role of Rapid-Cycle Evaluations

Unfortunately, traditional educational impact evaluations move at a snail’s pace. Twelve months to deliver findings. A few more to interpret them. By then, your product has changed, the district has moved on, or the funding opportunity has dried up.

That’s why Parsimony offers rapid-cycle educational impact evaluations in the form of MomentMN Snapshot Reports: fast, low-burden studies that give you meaningful answers while they still matter.

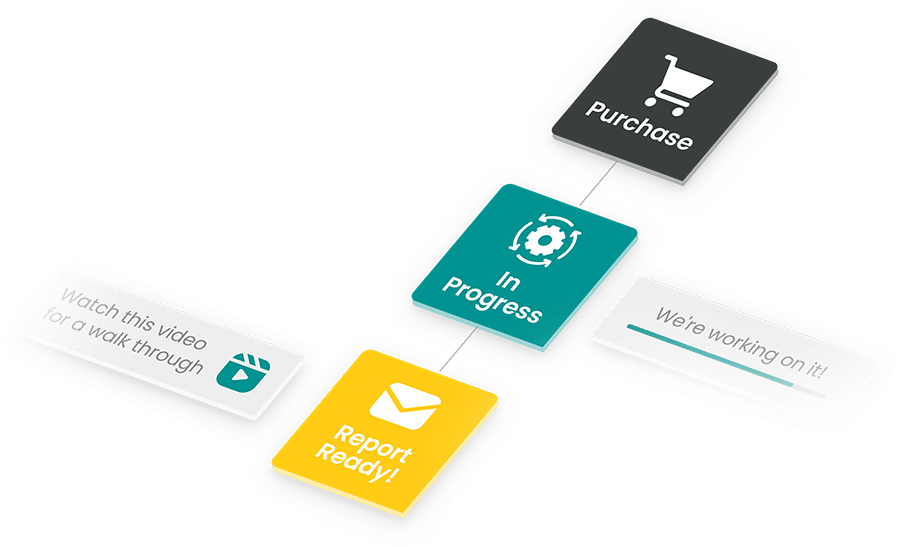

These Snapshot Reports:

- Deliver real insights in a matter of weeks

- Tap into existing district data so you’re not starting from scratch

- Avoid disruption to classrooms and reduce the load on district staff

- Adapt to your timeline, whether you’re preparing for renewal conversations, board presentations, or grant deadlines

And because they’re designed to be formative, not just summative, they don’t just help you measure impact. They help you make smart changes, show real value, and lead your next steps with confidence.

Experience the Power of Our MomentMN Snapshot Reports

You don’t need to wonder if your product is helping students. And you don’t need to lean on outdated data to tell your story. As more districts and funders demand real-world evidence before signing contracts or awarding grants, the distinction between “promising” and “proven” has never been more important.

With a MomentMN Snapshot Report, you get an independent impact evaluation tailored to the realities of K–12 education. Built on real district data. Delivered fast. Designed to be understood and shared.

Whether you’re managing a district, leading a nonprofit, or growing an EdTech brand, the smartest move is one backed by real evidence—and the kind of results your funders, partners, and decision-makers can rally behind.

Want to see what that looks like? Get a sample report and a custom walkthrough video to see how it works in action. It’s the easiest way to experience the clarity, credibility, and momentum MomentMN brings to every evaluation.